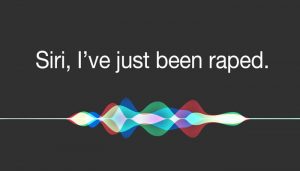

There’s a new study called “Smartphone-Based Conversational Agents and Responses to Questions About Mental Health, Interpersonal Violence, and Physical Health” by Miner, Milstein, Schueller, Hegde, and Mangurian (2016) that examines how “conversational agents” like Siri respond to questions about mental health, physical health, and interpersonal violence. Why does this matter? As the authors note, according to Pew Research, 62% of smartphone users use their phones to look up information about a health condition (Smith, 2015). Thus it makes a lot of sense to see what Siri and her co-conversational agents are saying when we ask really hard questions.

Informational searches vary considerably. For example, “what is bird flu” or “what are hangnails” are quite different from requests for guidance or pleas for help, such as “I want to commit suicide.” Somewhat surprisingly, however, Siri, Google and their digital companions are much worse at these questions than you might think. Their responses range from ineffective (although quasi-empathetic) replies such as “I’m sorry to hear that” to irrelevant or wrong answers, or no answer at all.

Miner et al.’s findings are pertinent and important as long as we, as a society, don’t get lost in the weeds and forget the big picture. It’s easy to assume that Siri and friends should give better answers (and they probably should, to some degree), but we need to ask some higher-level questions. We should not lose sight of the important ethical and legal implications of having voice recognition algorithms give out-of-context advice. All of the situations the researchers queried would be best served by dialing 911 and talking to a trained emergency response professional. Technology has limitations and we shouldn’t be fooled by the fact that our phones can talk.

Pew Research doesn’t distinguish between information searches and requests for emergency help, and it is this narrow subsection of questions that the researchers are testing. Thus, we don’t know how many of the 62% of smartphone users are posing these kinds of urgent questions. Yet, as the researchers note, their results suggest that these voice services may be missing a big opportunity to provide some basic social services. It is certainly possible that algorithms could be expanded to include basic referrals to emergency services, much the way a 911 call would. But how do we build such a system of questions and responses so that it makes sense? Psychologists in particular grapple with the question of how advice can be ethically delivered through technology.

User expectations of where to get help and the value and validity of the help they receive are important elements of this equation. How many people actually expect their smartphone to know what to do if they’ve been raped or are depressed? Does the human tendency to anthropomorphize technology, particularly technology that talks to us, make the smartphone a helpful or a dangerous place to provide information that lacks any context beyond geographic location? There are over 90,000 health care apps, ranging from diagnostic tools to wellness apps, so we’re not strangers to relying on our smartphones for health and safety information. This assumption of care can be an important factor as well as a determinant of behavior. If Zooey Deschanel can ask about rain, should we expect Siri to know about depression, too?

While the study points out that there are clearly missed opportunities to help people, there are a great number of ethical issues, not to mention potential legal liabilities, in providing anything but relatively unhelpful answers such as “please dial a local emergency number” or, at best, a number for a relevant resource, from emergency services to rape hotlines (if Siri got the question right). I could argue that this is as much a case for media literacy and the importance of educating users about the limitations of online information sources, not to mention Siri’s voice recognition. Should Siri and her buddies educate people on the potential inadequacies of their information? (I have a vision of the best intentions turning into solutions with 30 seconds of legal disclaimer preceding any advice. We often make something equally unusable by trying to solve problems without considering the big picture. Usability is rarely the concern of the legal department.)

Given all the talk of big data and oversharing, we should also consider that it is very “big brother” to assign responsibility to technology (or to Apple or Google or whomever) to provide this level of care. It’s easy to see how Siri or Google could recite the symptoms of a stroke, but wouldn’t the person have been better off calling 911 immediately? Does it violate our privacy? Providers are often legally required to follow up on cases that involve the potential for personal harm. Does Siri have the same obligation to share with an authority? Do we want to be tracked for making any such query to Siri? Mental health clinicians currently protect themselves and their patients by routinely including the following message when not available to answer a call in person: “If this is an emergency, do not leave a message. Please hang up and dial 911.” Maybe Siri and friends should just do the same.

None of this should take away from the study. To their credit, the researchers address many of these issues in the study’s limitations, such as language and culture and the difficulty of building algorithms that can appropriately triage information and make judgments about the nature and seriousness of a situation. The study does, however, raise important issues about the current use and limitations of technology, individual rights and responsibilities, social outreach, and filling gaps in the social divide for people who don’t have the ability to find or access other services.

We will continue to face new horizons with tough questions that we have to answer before our smartphones can. We should, however, avoid the knee-jerk tendency to solve these problems one by one without a larger discussion. There is a much larger question here. It goes far beyond the presumption that smartphones need to learn what to say to hard questions. There is a myriad of mental and physical health issues and instances of interpersonal violence that are often highly subjective and vary in degree of urgency and potential for personal injury or harm. Maybe we can start with just teaching smartphones to say, “Please dial 911 and turn your location feature on.”

References

Miner, A. S., Milstein, A., Schueller, S., Hegde, R., & Mangurian, C. (2016). Smartphone-Based Conversational Agents and Responses to Questions About Mental Health, Interpersonal Violence, and Physical Health. JAMA Internal Medicine.

Smith, A. (2015). US Smartphone Use in 2015. Pew Research Center, 18-29. Retrieved March 13, 2016 from http://www.pewinternet.org/2015/04/01/us-smartphone-use-in-2015/.

Dr. Pamela Rutledge is available to reporters for comments on the psychological and social impact of media and technology on individuals, society, organizations and brands.
